What is Online Machine Learing?

We understand the process of answering a sequence of questions given knowledge of the correct answers to previous questions

Posted by :  Lokesh Kumar ✪ ✪ 8 min read.

Lokesh Kumar ✪ ✪ 8 min read.

Contents

- What is online learning?

- How’s an online learning problem setup?

- Measuring performance of Online Algorithms

- Motivating uses of Online Learning

- Introducing Cover’s Impossibility Result

What is Online Learning?

In conventional machine learning, we have access to a large dataset on which we train conventional ML algorihtms. We then aim to answer queries depending on the nature of the problem (classification, regression). The performace is judged based on metrics like accuracy, MSE (mean squared error) etc. All learing of this kind fall into the sub-discipline of machine learning called Offline Learning.

Now consider the following cases where,

- Problem is so complex, that theoritical modelling and applying appropriate optimization techniques to solve them might be infeasible

- Training dataset is ridiculously large, and therefore computationally impossible to employ batch training

- ML algorihtm needs to face dynamic new patterns in the data, trend and seasonal variations in the data.

- ML algorithm must adapt to the changing dynamics of data creation, and keep itself relevant at all instances of time.

Online Learning, a sub-discipline of machine learning aims to solve these problems. Online learning agents aim to make a sequence of accurate predictions given knowledge of the correct predictions of previous tasks and optionally any other additional information.

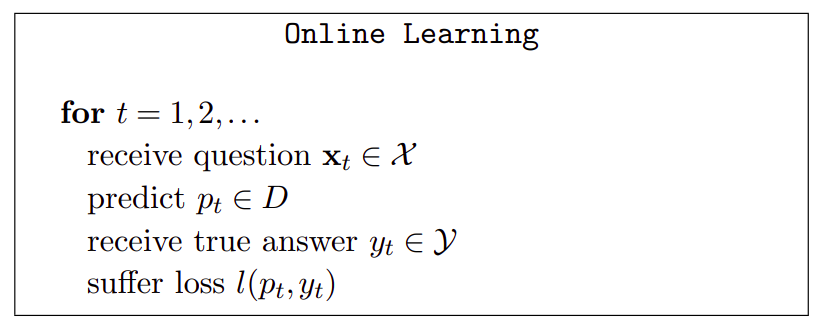

How’s an online learning problem setup?

- Online Learning is performed in a sequence of consecutive rounds/timesteps.

- At round , learner is given a question taken from the question set . The learner provides an answer after processing the input.

- After learner’s prediciton, the correct answer is revealed from the answer set .

- The learner suffers a loss which measures the error in prediction.

For example, in the case of online classification (will it rain tomorrow), the following variables take the form

- is the feature representation of weather of the day, denotes the input feature domain where input is obtained

- denotes the whether it will rain tomorrow (0 = no rain, 1 = rain). Note this is known only tomorrow, and hence all decisions must be taken with the algorithm’s estimate of (i,e)

- The loss function can be assumed to be 0-1 loss function (i,e) .

Measuring performance of Online Algorithms

We look specifically into two metrics:

- Mistake bound (valid under realizability assumption)

- Regret

Before we take problem head-on, we need to define some notations to make understanding easier. denotes the hypothesis class, a set of collection of mappings from domain to target space. The learner can choose any mapping from .

Mistake Bound

Realizability Assumption: We assume that generated by the environment is taken from a mapping . This means, a mapping in the set of allowed mapping which exactly matches the true dynamics of the environment (generation of ).

Under this assumption, the aim of the learning agent is to find as soon as possible from . For the online learning algorithm, , we denote by the maximum number of mistakes might commit on a sequence of examples labelled by . A bound on is called the mistake-bound and we must design algorihtms with minimal . Its easy to understand that if the problem is not realizable,

Regret

Now we can relax the realizability assumption we no longer require the answers () to be generated by some . We want the algorithm to be competitive with the best fixed predictor from . This notion of “regret” measures how much algorithm has suffered in terms of loss till now, for having not followed the some predictor . So, defining the regret wrt to some

Loss accumulated by the algorithm from to is . The loss accumulated by choosing a for all timesteps is . The regret definition now is straightforward and is,

Regret of the relative to the hypothesis class , is the maximum achievable regret

Motivating uses of Online Learning

I’m providing a set of problem which can be modelled as online learning problems. We will begin by addressing the Prediction from expert advice problem.

Prediction from expert advice

Consider a simple scenario where in a single day, the learning agent must decide on whether to engage in a transaction or not. Engaging in a transaction can result in profit or loss, which is decided by factors out of agents control (environment). Agent has access to a set of experts who suggest the agent whether to engage in transaction or not, when the day starts.

The agent must use a strategy to combine the advices of the expert and take the appropriate action (engage in transaction or not) so as to maximize the profits it receives.

Food for Thought: We can approach this problem in a batch learning fashion. Store the advices of the experts (0 = donot engage in transaction, 1 = engage in transaction) for timesteps, constructing the data matrix . Agent always engages in a transaction, thereby knowing whether transaction at a particular day is profitable or not which constucts a 0-1 vector, (0 for loss, 1 for profit). Now we have a supervised offline version of this problem, which can be solved by employing classification algorihtms. Is it a good approach?

Online spam filtering

Emails arrive into the system and the classifier must identify whether the new email is a spam or not. Note that the learner must learn to adapt dynamically to reject adversarially generated spam emails to target the users.

Lets represent each incoming email at time using feature vector . Assume we have a linear spam filter whose weights are . Prediction Rule (-1 denotes spam, +1 denotes valid). Its clear that the predictor must dynamically update itself, so we define a convex loss function and the predictor must update itself (change appropriately) such that it minimizes the total loss till the given time .

Recommendation systems

No online learning text is complete without mention of recommendation systems which revolutionized entertainment and e-retail industry. Recommendation system problem can be cast as a matrix completion problem. Lets consider a user-item matrix where we have customers and items (may be songs, movies etc).

In online setting, at each iteration the algorithm outputs a preference matrix (its estimate of ) where (all possible 0-1 matrix). Now, environment chooses a user-item pair along with the real preference for this pair . Thus the loss experienced by the algorihtm is

We generally have other priors on the preference matrix such as its a low rank matrix which we can leverage on.

Introducing Cover’s Impossibility Result

Consider an online binary classification, and 0-1 loss . On each round learner receives and predicts . Then is revealed by the environment and pays a loss .

We now will show that achieving sub-linear regret is not possible = Cover’s Impossibility Result

Finite Hypothesis Class Assumption:

where .

Now lets say, the environment waits for to predict and explicitly sets . Well, this is completely possible and this is bad news. This means that commits a mistake at all time steps starting from to . What about the second term?

Consider a sequence of , the best estimator (which is present in ) is where is the majority label in the above sequence. This means the number of mistakes made by is atmost . Therefore, the regret of any online algorithm is atlest which is not sublinear with .

Dont get demotivated, we have a way around by restricting the environment’s “power”.

Since this is a roadblock and no further analysis can be done, we now impose some restrictions on the adversary/environment so that meaningful analysis can be done.

- Realizability of the Adversary

- Randomization of Algorithm Prediction

Randomization of Algorithm Prediction: Here we randomize the algorithm’s prediction. Therefore, the adversary may be aware of the probability distribution of the algorithms outcome, but not the actual .

Next time, we will see some algorihtms (randomized weighted majority, Winnow, Perceptron) and their regret bounds. Comment down your suggestions and would love to hear your feedback!